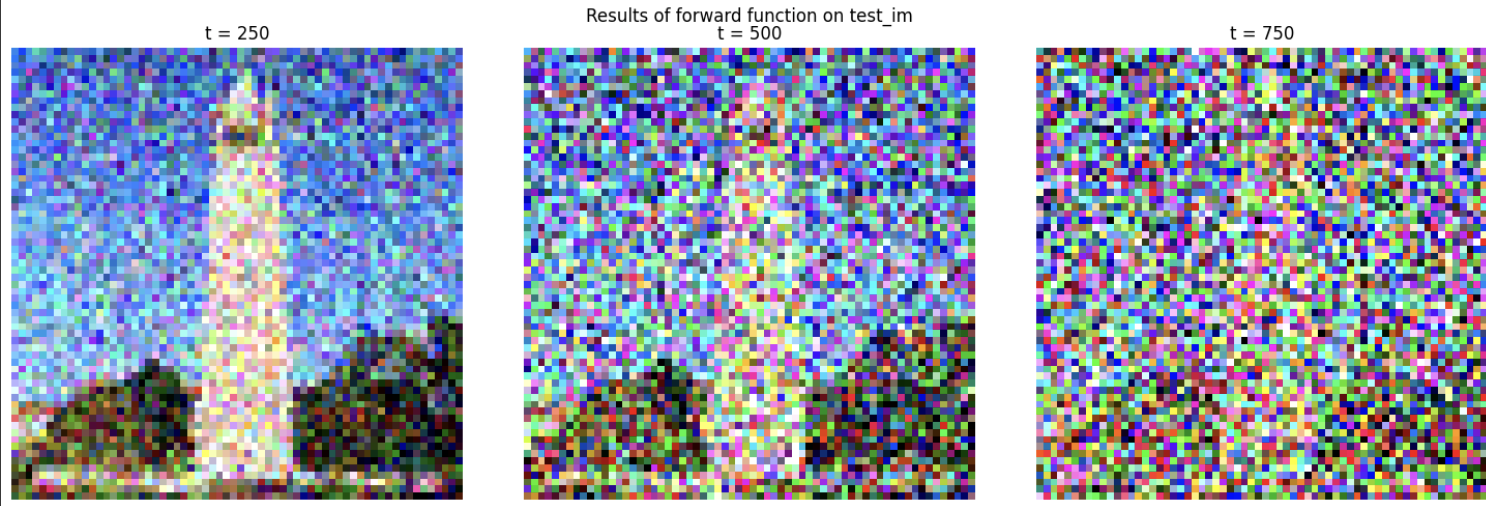

Noising Forward

In this section we explore the forward process for adding noise to an image. We iteratively apply Gaussian noise, sampled with torch.randn_like, from t = 0 to t = 1000. At each step we sample fresh noise and add it to the image from step t-1. The results are below.

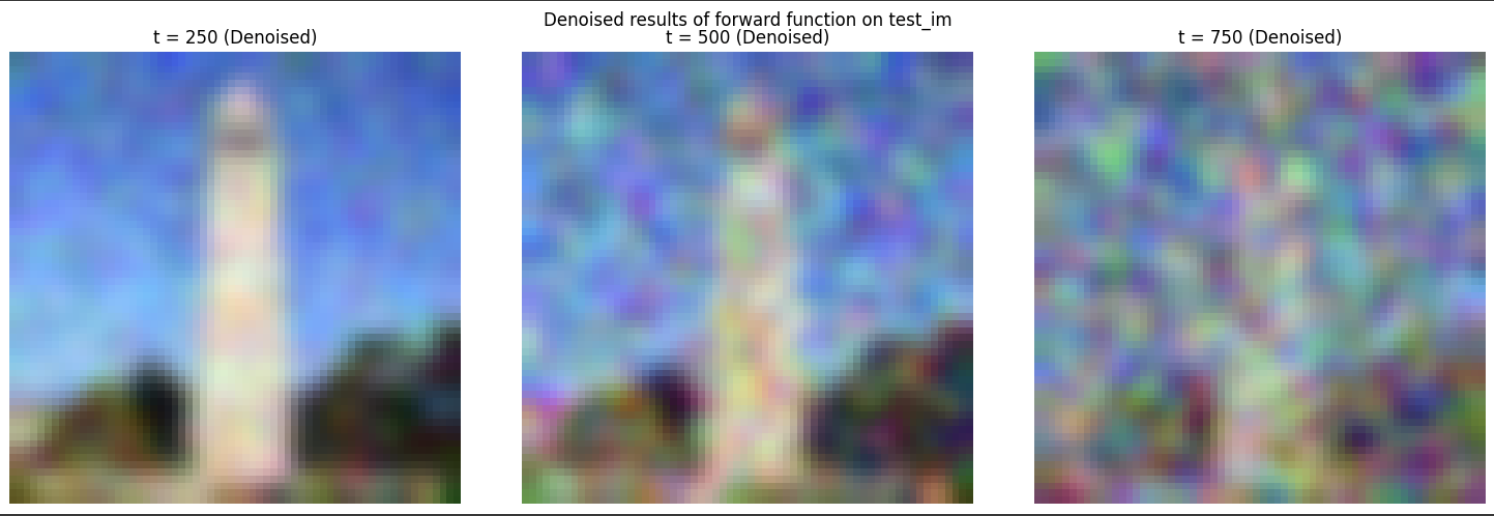
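The per-step noising described above can be sketched as follows. This is a minimal sketch rather than the project's actual code: the linear `betas` schedule and the helper names `make_betas` and `forward_noise` are assumptions made for illustration, and the update shown is the standard variance-preserving step.

```python
import torch

def make_betas(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    # Assumed linear noise schedule; the project may use a different one.
    return torch.linspace(beta_start, beta_end, num_steps)

def forward_noise(x0, t, betas):
    """Noise x0 for t steps, sampling fresh Gaussian noise at every step."""
    x = x0.clone()
    for step in range(t):
        eps = torch.randn_like(x)  # noise with the same shape as the image
        # Scale the previous image down slightly, then add scaled noise.
        x = torch.sqrt(1 - betas[step]) * x + torch.sqrt(betas[step]) * eps
    return x

betas = make_betas()
image = torch.rand(1, 3, 64, 64)  # stand-in for a real image in [0, 1]
noisy = {t: forward_noise(image, t, betas) for t in (250, 500, 750)}
```

With this schedule the image is almost pure unit-variance noise by t = 1000, which is why later sections can start sampling from random noise.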

Classical Denoising

In this section we explore classical denoising. As we have seen in previous projects, a Gaussian blur acts as a low-pass filter, which is effective at removing some of the noise. As t increases, you can see this technique struggle.

Here we apply torchvision.transforms.functional.gaussian_blur to the noisy image at each timestep.

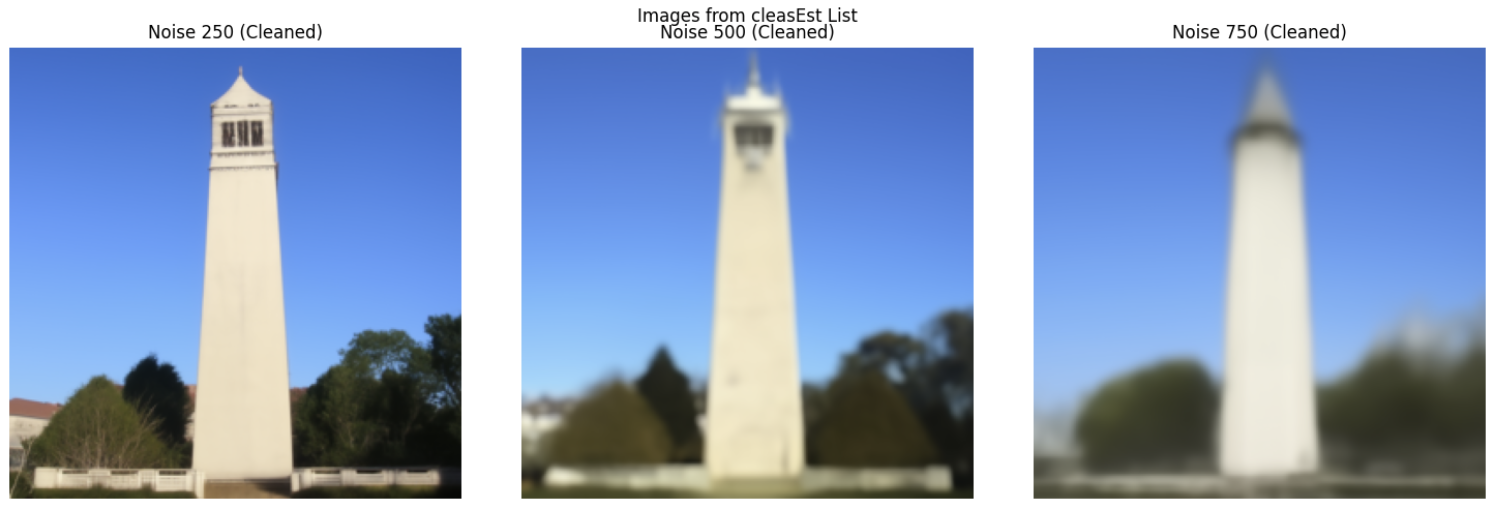

One step Denoise

We now use the UNet to solve the denoising problem where Gaussian blurring failed. We denoise the noisy image in a single step with the prompt "a high quality image" at multiple timesteps. The results are below.

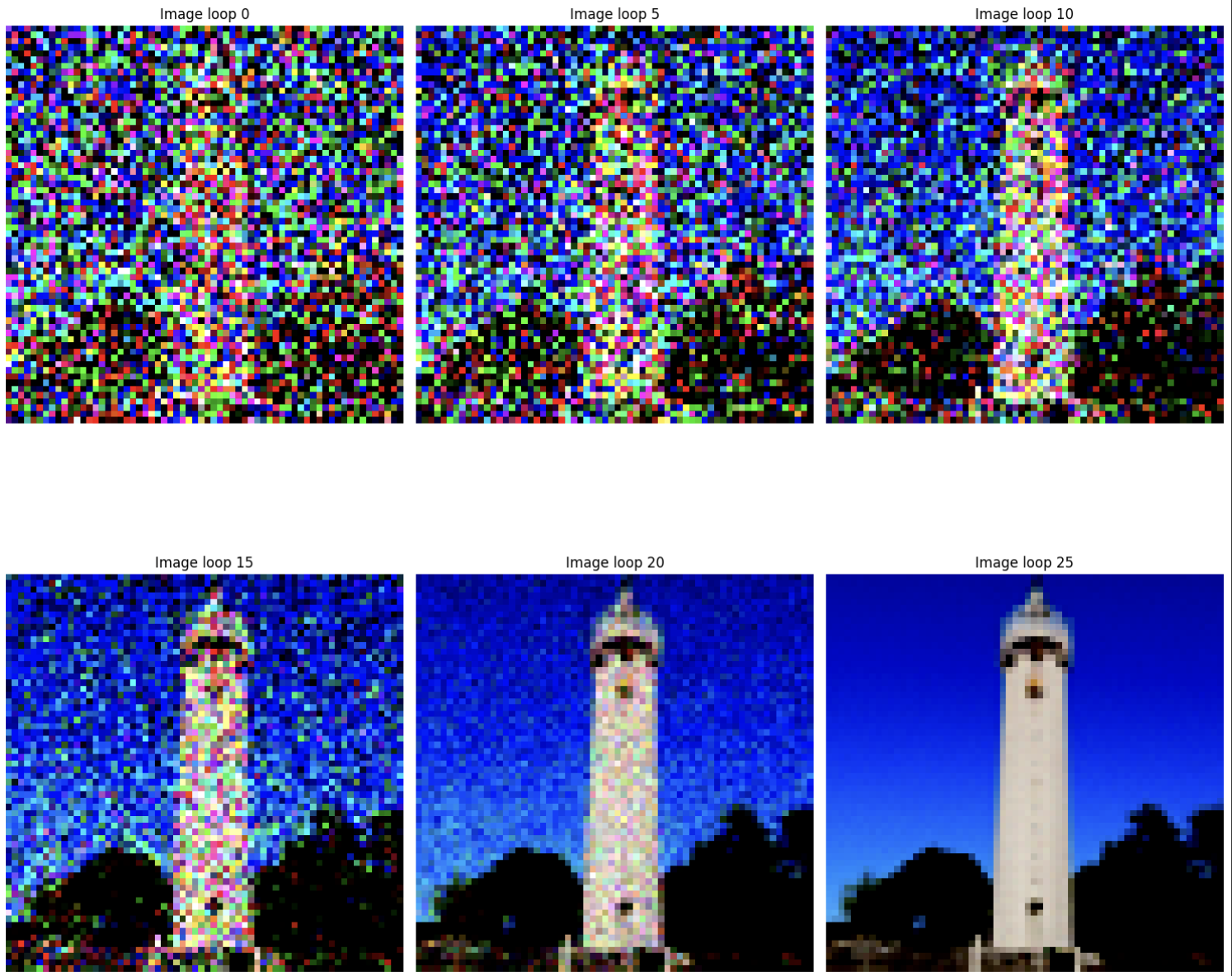
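One-step denoising amounts to inverting the noising equation once the noise has been estimated. The sketch below assumes the usual closed form x_t = sqrt(ᾱ_t) x_0 + sqrt(1 - ᾱ_t) ϵ; the name `one_step_denoise` and the value of `alpha_bar_t` are hypothetical, and the true noise stands in for the UNet's prediction.

```python
import torch

def one_step_denoise(x_t, eps_hat, alpha_bar_t):
    """Estimate the clean image from x_t and a predicted noise eps_hat."""
    return (x_t - torch.sqrt(1 - alpha_bar_t) * eps_hat) / torch.sqrt(alpha_bar_t)

torch.manual_seed(0)
x0 = torch.rand(1, 3, 8, 8)
eps = torch.randn_like(x0)
alpha_bar_t = torch.tensor(0.3)  # hypothetical cumulative signal level at t
x_t = torch.sqrt(alpha_bar_t) * x0 + torch.sqrt(1 - alpha_bar_t) * eps
# Here the true noise stands in for the UNet's noise estimate.
x0_hat = one_step_denoise(x_t, eps, alpha_bar_t)
```

With a perfect noise estimate the clean image is recovered exactly; in practice the UNet's estimate degrades as t grows, so the reconstruction drifts further from the original.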

Iterative Denoise

Extending the idea from the previous section, we now denoise the image iteratively with the prompt "a high quality image" over a sequence of timesteps. Each step moves the noisy image incrementally closer to the natural image manifold.

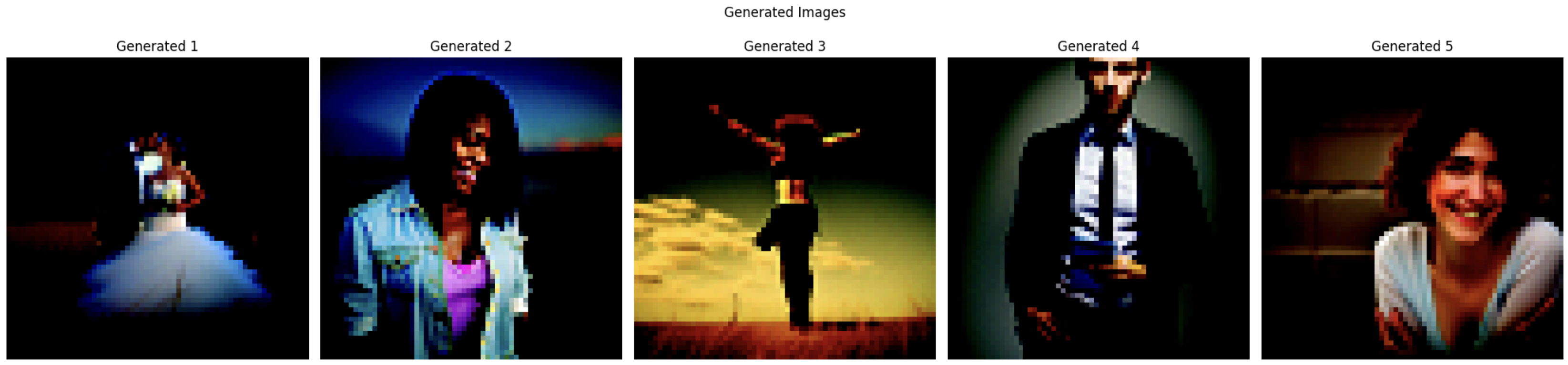

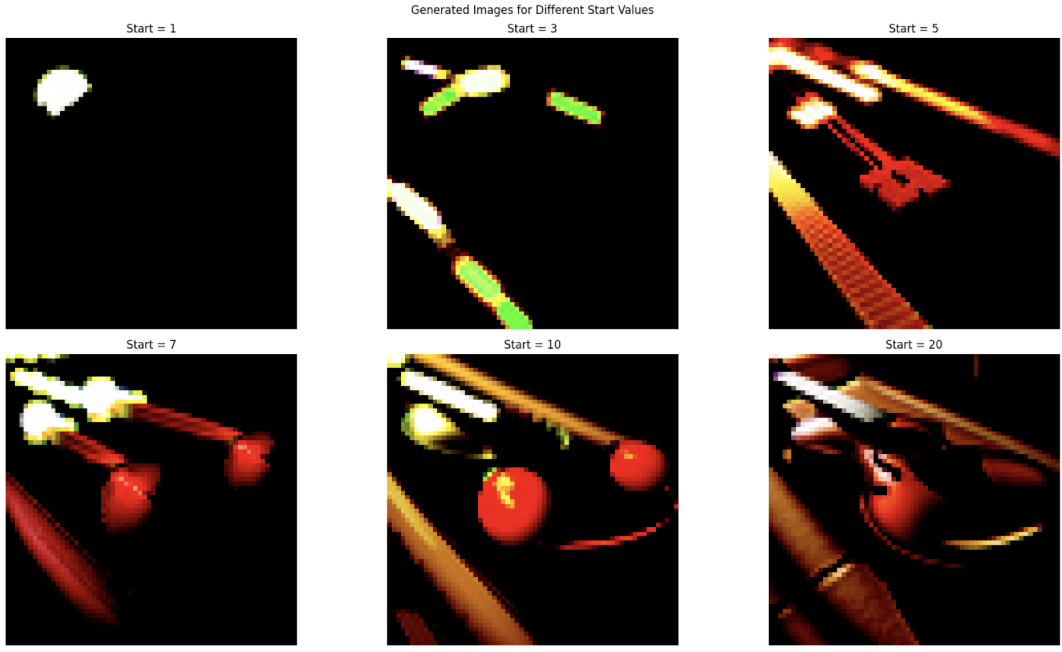
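The iterative loop can be sketched as below, under several assumptions: a linear beta schedule, a stride of 30 timesteps, and a hypothetical `noise_model(x, t)` callable standing in for the prompted UNet. The random variance term that is normally added at each step is left out to keep the sketch deterministic.

```python
import torch

def iterative_denoise(x, timesteps, alphas_cumprod, noise_model):
    """Walk x from timesteps[0] down to timesteps[-1], one stride at a time."""
    for t, t_prev in zip(timesteps[:-1], timesteps[1:]):
        abar_t, abar_prev = alphas_cumprod[t], alphas_cumprod[t_prev]
        alpha = abar_t / abar_prev  # effective alpha for this stride
        beta = 1 - alpha
        eps_hat = noise_model(x, t)
        # Current estimate of the clean image.
        x0_hat = (x - torch.sqrt(1 - abar_t) * eps_hat) / torch.sqrt(abar_t)
        # Blend the clean estimate with the current noisy image.
        x = (torch.sqrt(abar_prev) * beta / (1 - abar_t)) * x0_hat \
            + (torch.sqrt(alpha) * (1 - abar_prev) / (1 - abar_t)) * x
    return x

betas = torch.linspace(1e-4, 0.02, 1000)       # assumed schedule
alphas_cumprod = torch.cumprod(1 - betas, dim=0)
timesteps = list(range(990, -1, -30))          # 990, 960, ..., 0
```

Striding over the timesteps keeps the number of UNet calls small compared with stepping through all 1000 levels.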

Diffusion Sampling

The samples below show the effect of diffusion sampling. We generate 5 images from the UNet. The input is a completely random image: we start at timestep = 1000 and denoise down to timestep = 0.

Classifier Free Guidance

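At the expense of image diversity, we can apply classifier-free guidance to the UNet. This combines the conditional and unconditional noise estimates as follows:

$$ \epsilon = \epsilon_u + \gamma (\epsilon_c - \epsilon_u) $$

The unconditional noise estimate ϵ_u is simply the output of the UNet with an empty prompt, ϵ_c is the estimate conditioned on the text prompt, and γ sets the guidance strength. The results are below.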

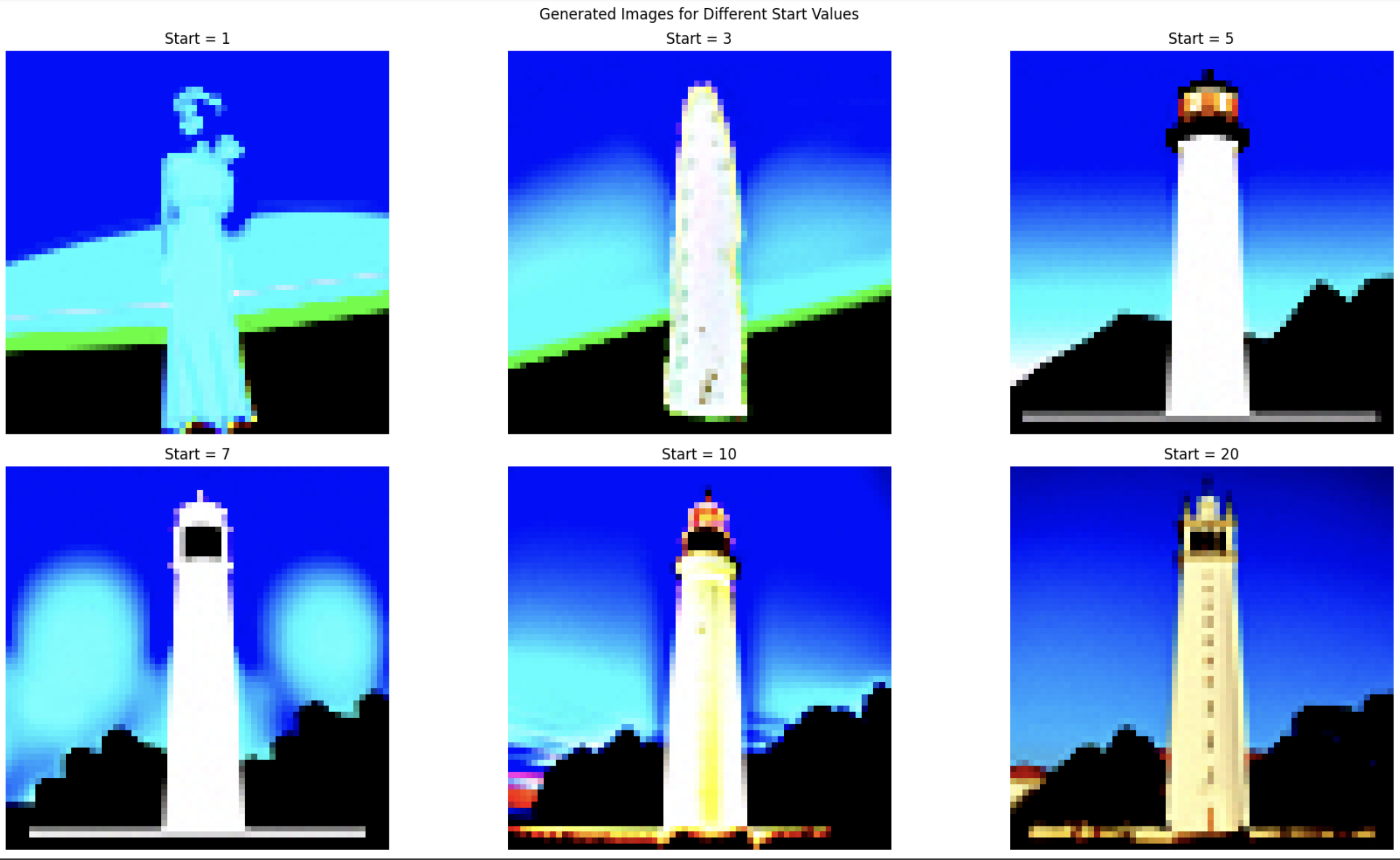

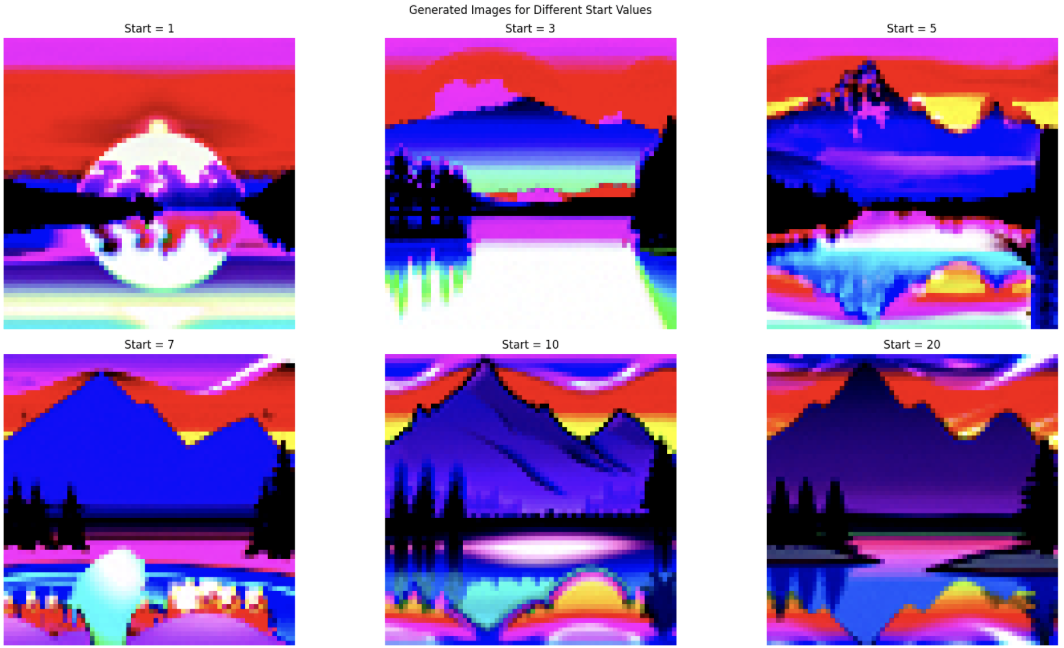

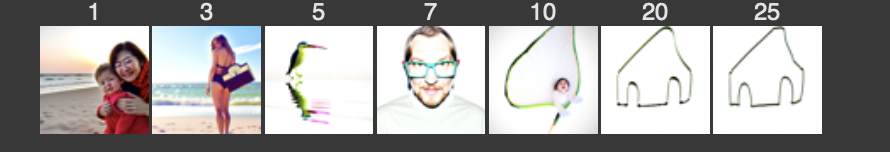

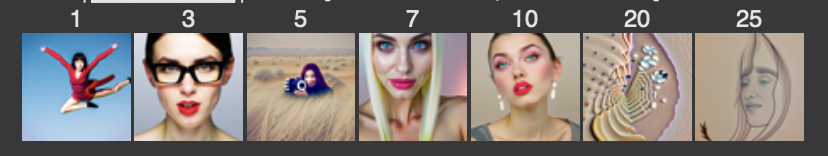

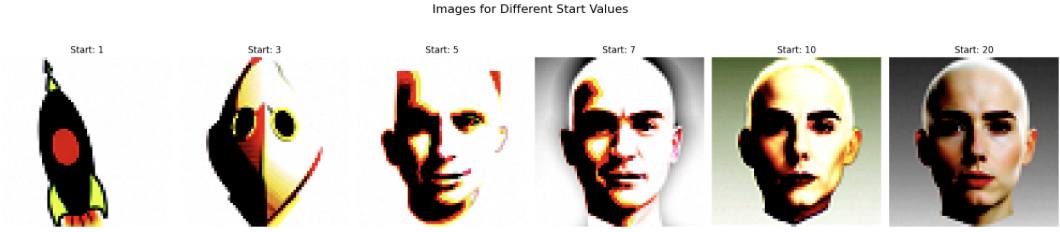

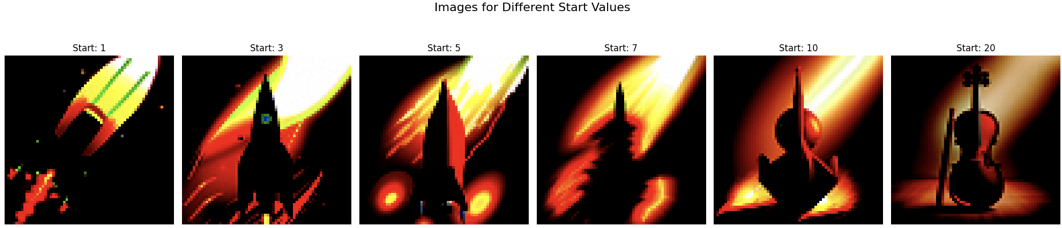

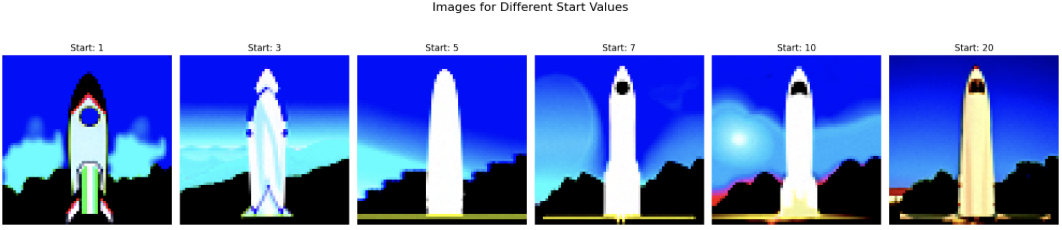
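The classifier-free guidance combination described above is a one-line operation. In this sketch the two noise estimates are constant stand-in tensors rather than real UNet outputs, and the value of `gamma` is illustrative only.

```python
import torch

def cfg_noise(eps_uncond, eps_cond, gamma):
    """Combine unconditional and conditional noise estimates."""
    return eps_uncond + gamma * (eps_cond - eps_uncond)

eps_u = torch.zeros(1, 3, 8, 8)  # stand-in for the UNet output with an empty prompt
eps_c = torch.ones(1, 3, 8, 8)   # stand-in for the UNet output with the text prompt
guided = cfg_noise(eps_u, eps_c, gamma=7.0)  # illustrative guidance strength
```

Setting gamma to 0 returns the unconditional estimate and gamma to 1 returns the conditional one; values above 1 extrapolate past the conditional estimate, which is where the loss of diversity comes from.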

Image to Image translation

We can make edits to an image by adding noise to it and then applying the UNet to the noisy image. In the images below we begin the diffusion process at varying noise levels. We then reconstruct the image, allowing the algorithm to make edits in the process.

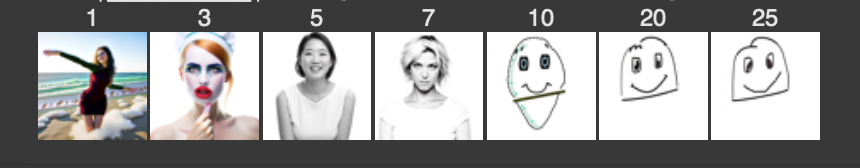

Hand Drawn Images

We then apply the algorithm above to hand-drawn images with the prompt "a high quality image". The results are below.

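Inpainting

In this section we use masks to fill selected regions of an image with different content. This is achieved by applying the update below at each step of the denoising loop:

$$ x_t \leftarrow \mathbf{m} x_t + (1 - \mathbf{m}) \, \text{forward}(x_{\text{orig}}, t) $$

Where the mask m is 1 the denoised content is kept, and where it is 0 the pixels are reset to the original image noised to timestep t.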
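The masked update above can be sketched as follows. As an assumption for illustration, `forward` here takes the cumulative signal level `alpha_bar_t` directly instead of the timestep t, and `inpaint_step` is a hypothetical helper name.

```python
import torch

def forward(x_orig, alpha_bar_t):
    # Closed-form noising of the original image to the level of timestep t.
    eps = torch.randn_like(x_orig)
    return torch.sqrt(alpha_bar_t) * x_orig + torch.sqrt(1 - alpha_bar_t) * eps

def inpaint_step(x_t, x_orig, mask, alpha_bar_t):
    # mask == 1: keep the denoised content; mask == 0: restore the original.
    return mask * x_t + (1 - mask) * forward(x_orig, alpha_bar_t)

x_orig = torch.rand(1, 3, 8, 8)
x_t = torch.randn(1, 3, 8, 8)    # current sample in the denoising loop
mask = torch.zeros(1, 1, 8, 8)   # broadcasts across the color channels
mask[..., 2:6, 2:6] = 1.0        # square region to regenerate
x_t = inpaint_step(x_t, x_orig, mask, torch.tensor(0.5))
```

Because the unmasked pixels are re-noised to the current level at every step, they stay consistent with the sample being denoised while still converging to the original image.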

Text to Image Translation

In this section we make edits to an image by guiding the denoising with a specific prompt instead of "a high quality image". The examples are below.

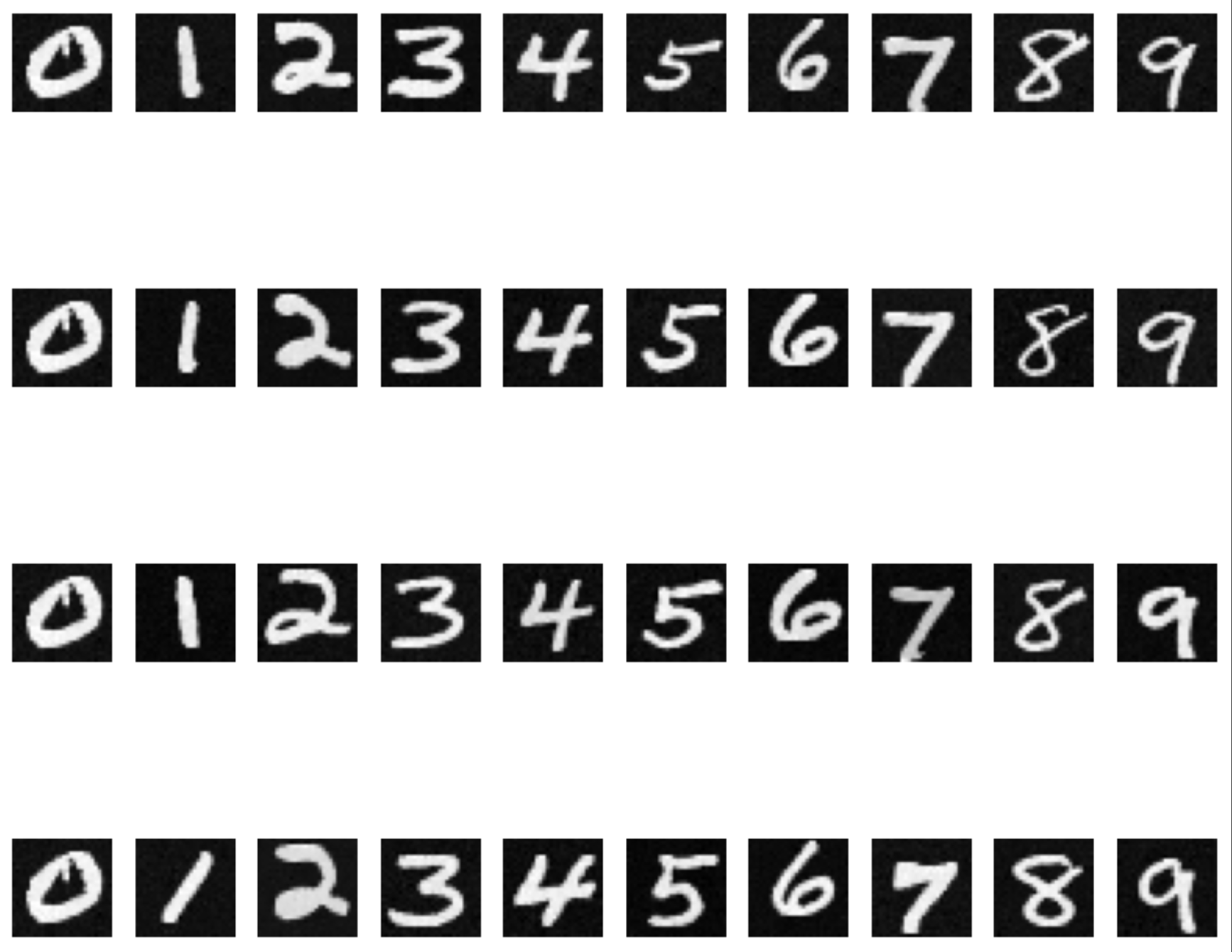

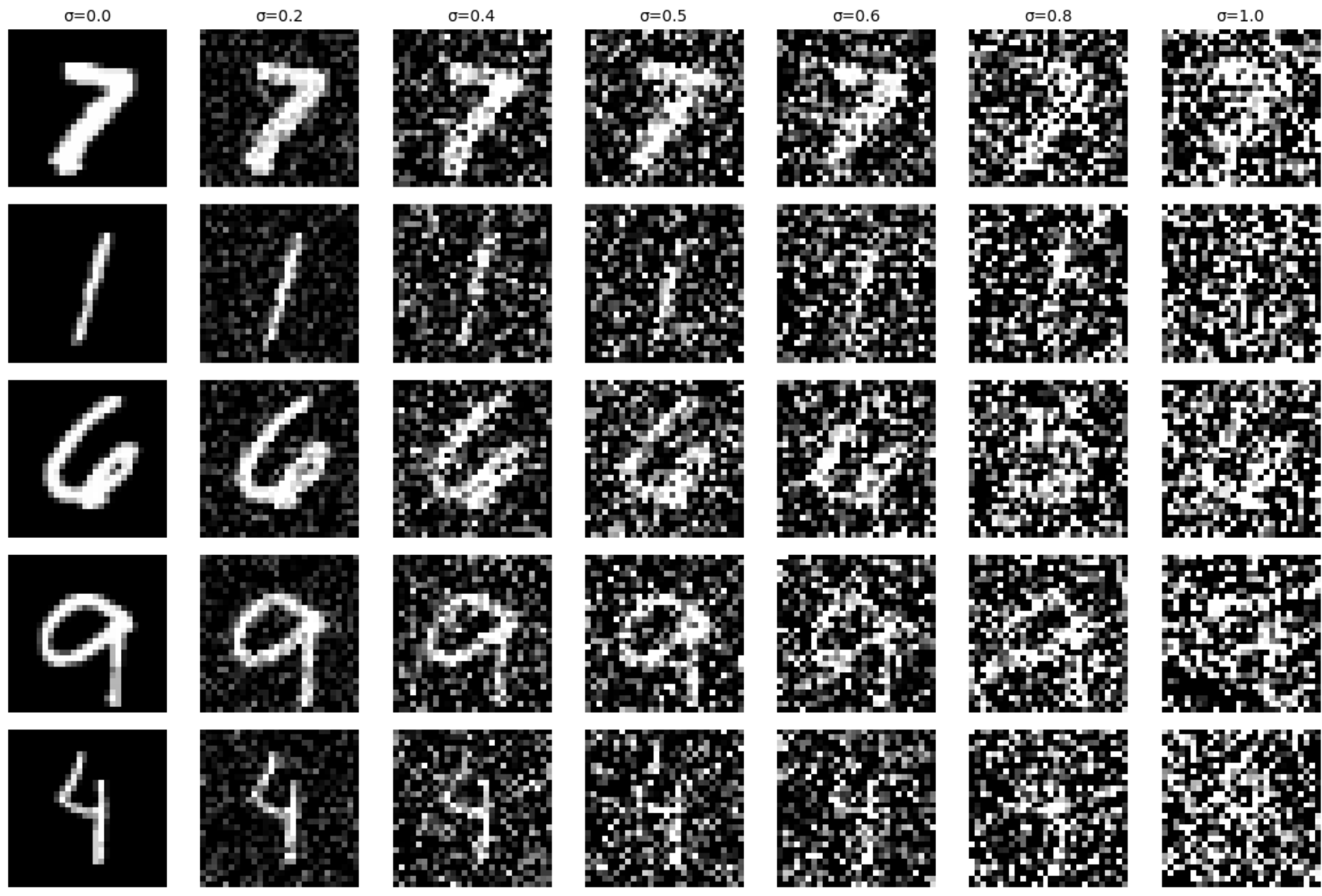

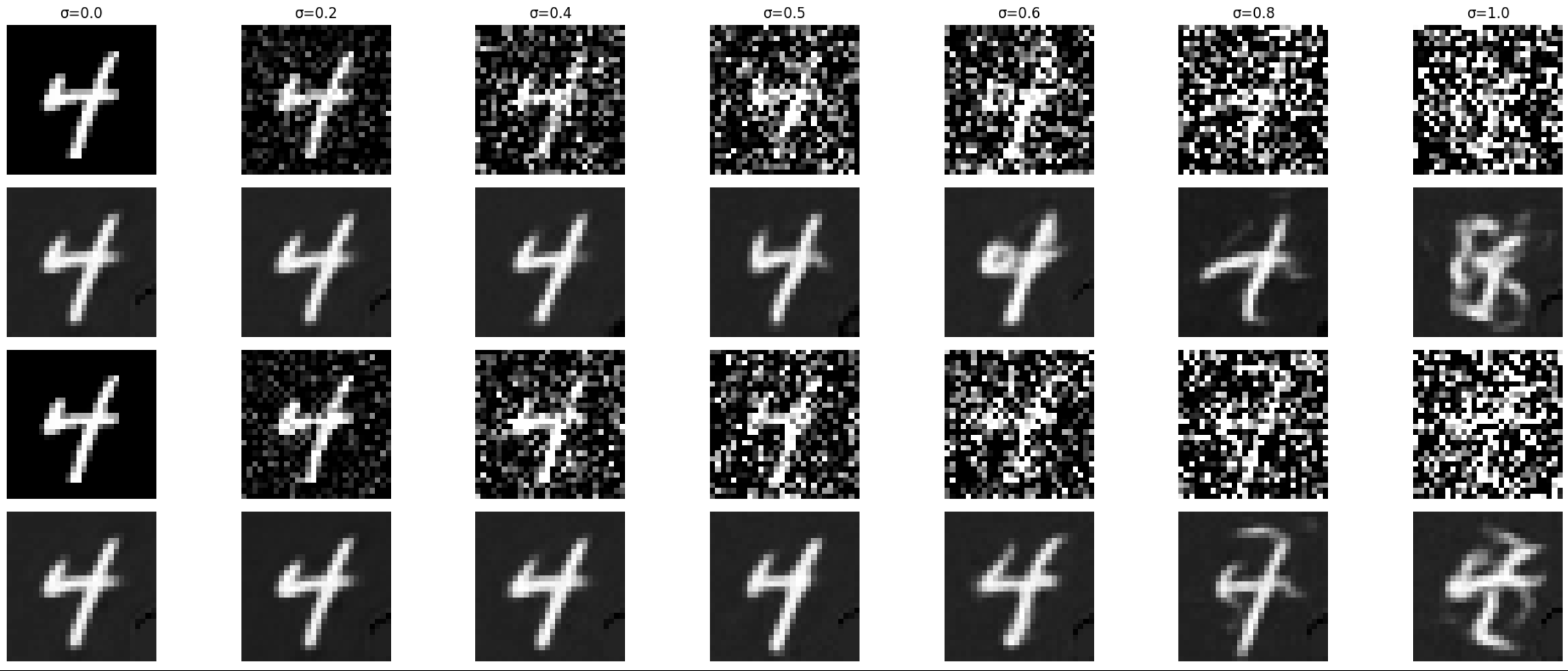

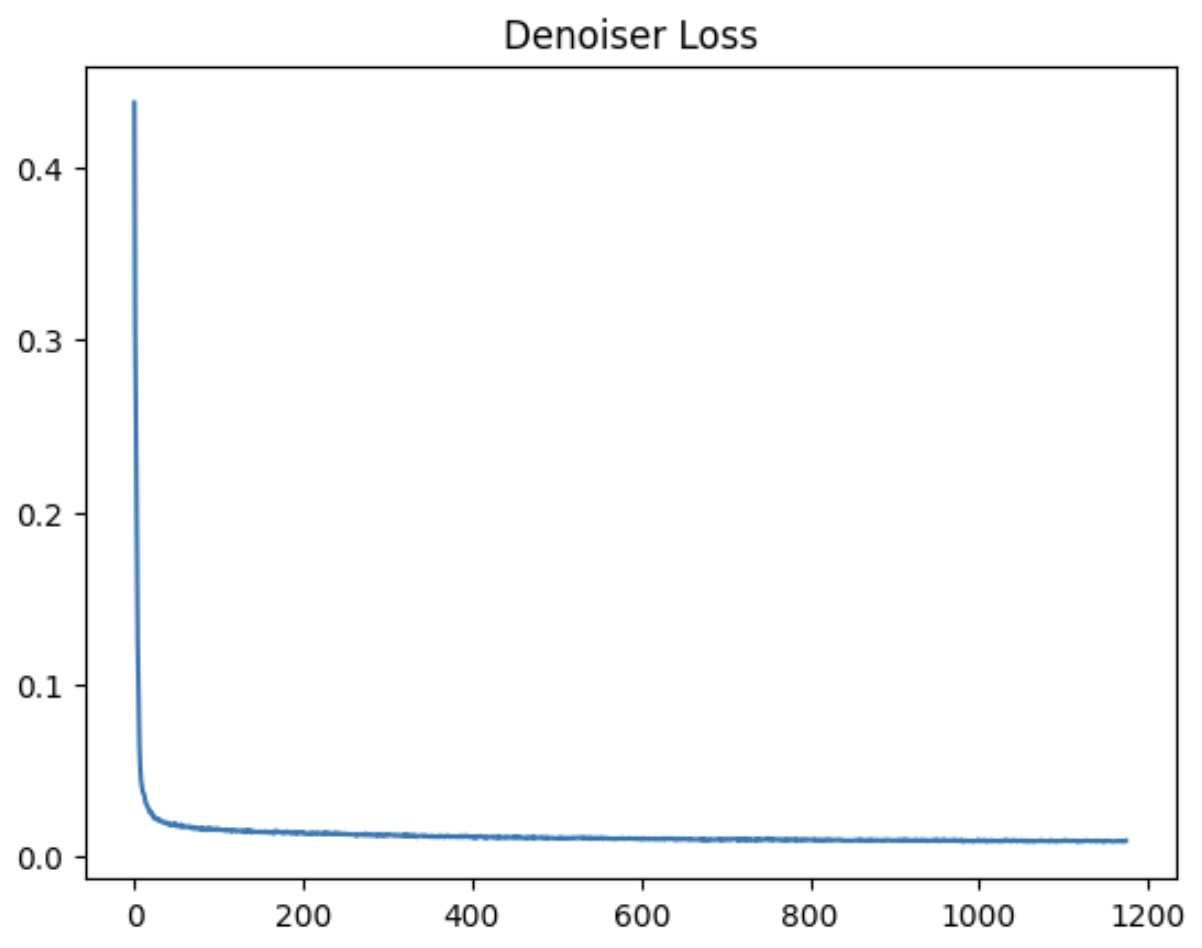

MNIST Denoiser

In this section we build the UNet architecture in PyTorch for the MNIST dataset. We first train the UNet on MNIST with a noise ratio of alpha = 0.5. We then assess the quality of the denoiser on the test set using several different alphas, as displayed below. The subplot shows epoch 1 on top and epoch 5 at the bottom.

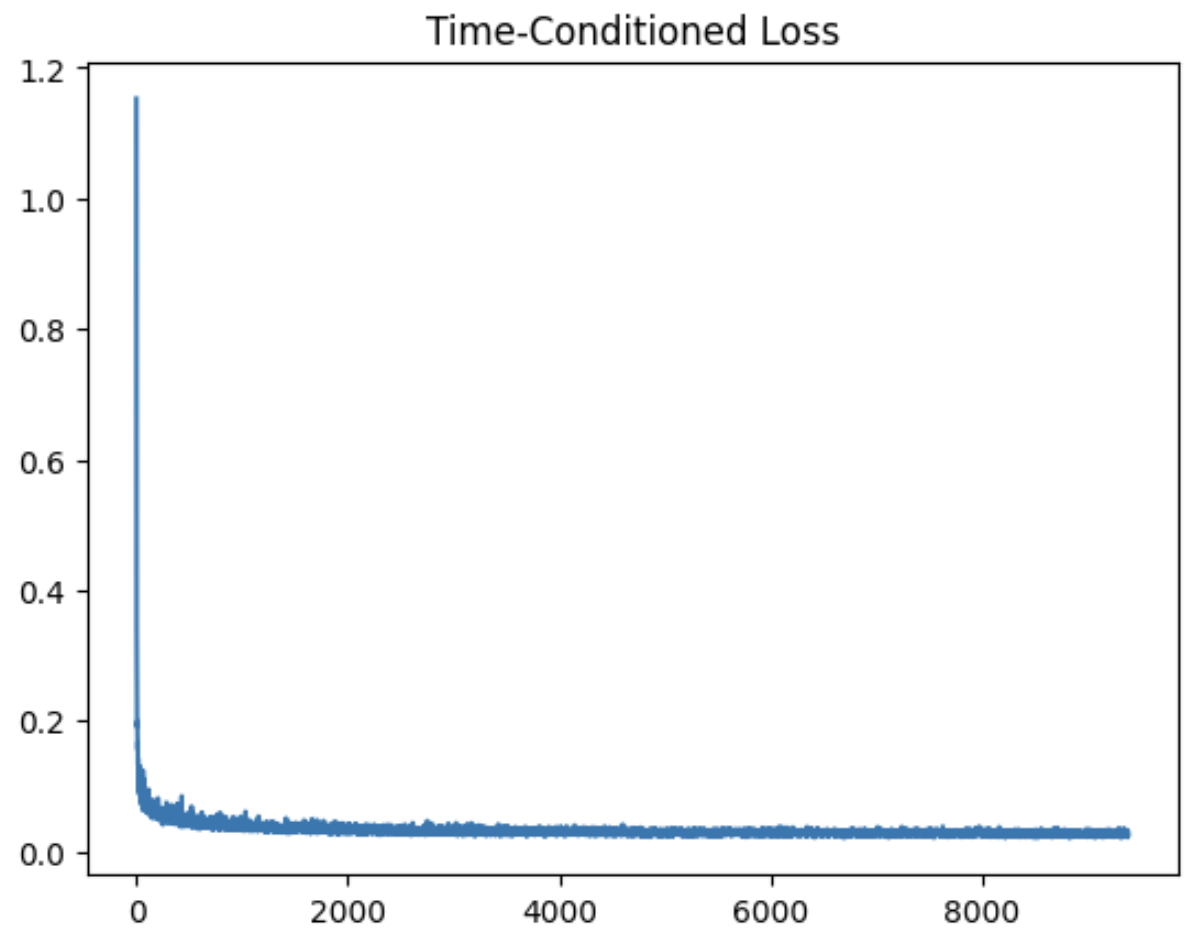
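A single training step for such a denoiser might look like the sketch below. It assumes the noisy input is the clean digit plus alpha-scaled Gaussian noise and that the loss is the mean squared error against the clean digit; the two-layer network is only a stand-in for the full UNet, and the random batch is a stand-in for MNIST data.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def add_noise(x, alpha):
    # Assumed noising rule: clean image plus alpha-scaled Gaussian noise.
    return x + alpha * torch.randn_like(x)

# Tiny stand-in network; the project uses a full UNet here.
denoiser = nn.Sequential(
    nn.Conv2d(1, 16, 3, padding=1), nn.GELU(),
    nn.Conv2d(16, 1, 3, padding=1),
)
optimizer = torch.optim.Adam(denoiser.parameters(), lr=1e-3)

def train_step(clean_batch, alpha=0.5):
    noisy = add_noise(clean_batch, alpha)
    loss = F.mse_loss(denoiser(noisy), clean_batch)  # predict the clean digit
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()

batch = torch.rand(8, 1, 28, 28)  # stand-in for a batch of MNIST digits
loss = train_step(batch)
```

Evaluating at other noise levels only requires calling `add_noise` with a different alpha on the test images and passing the result through the trained network.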

Time Conditioned

Building on the UNet architecture from above, we now add fully connected layers to condition on time. The time fraction is passed into these layers, and their outputs are appended to the unflatten and upblock1 layers.

Below are the results at epoch 5

Below are the results at epoch 20

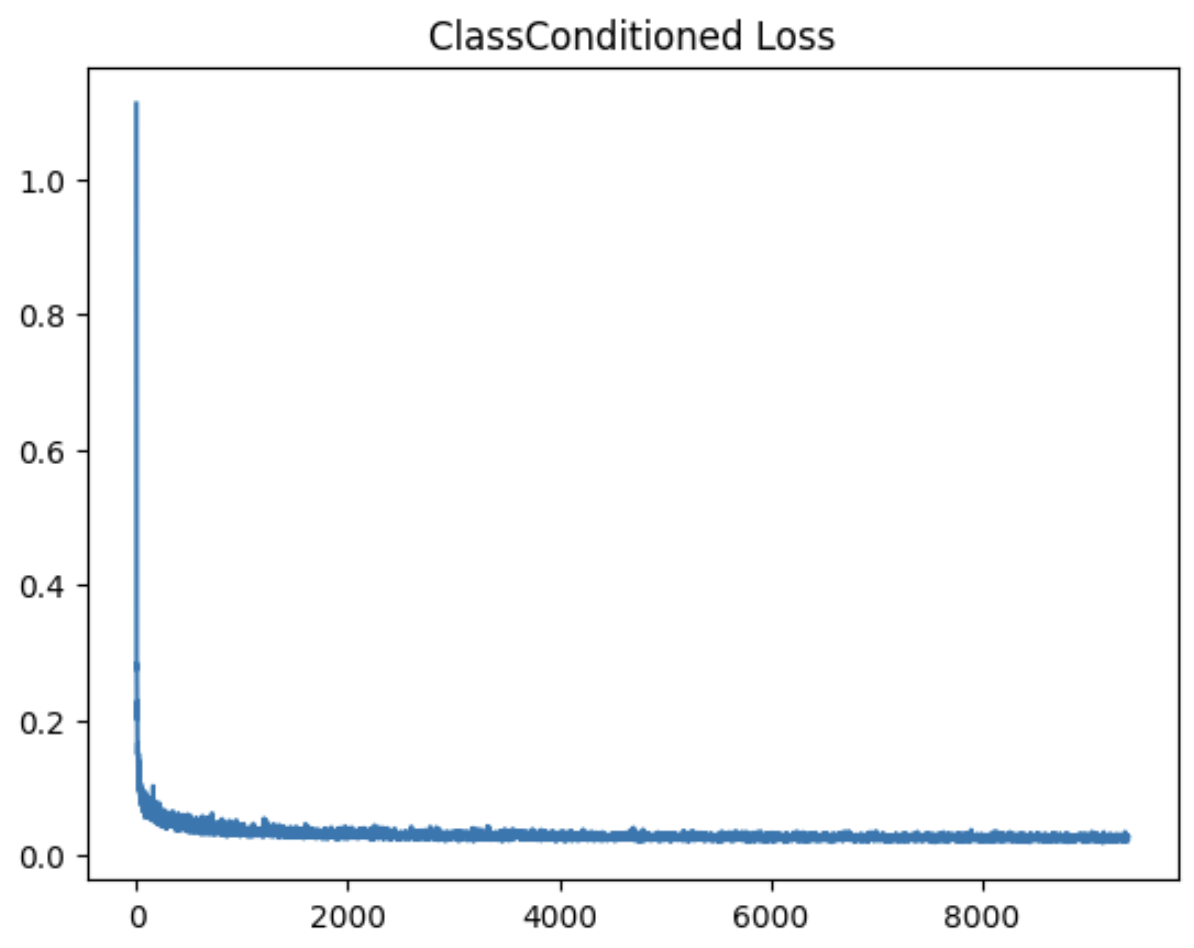

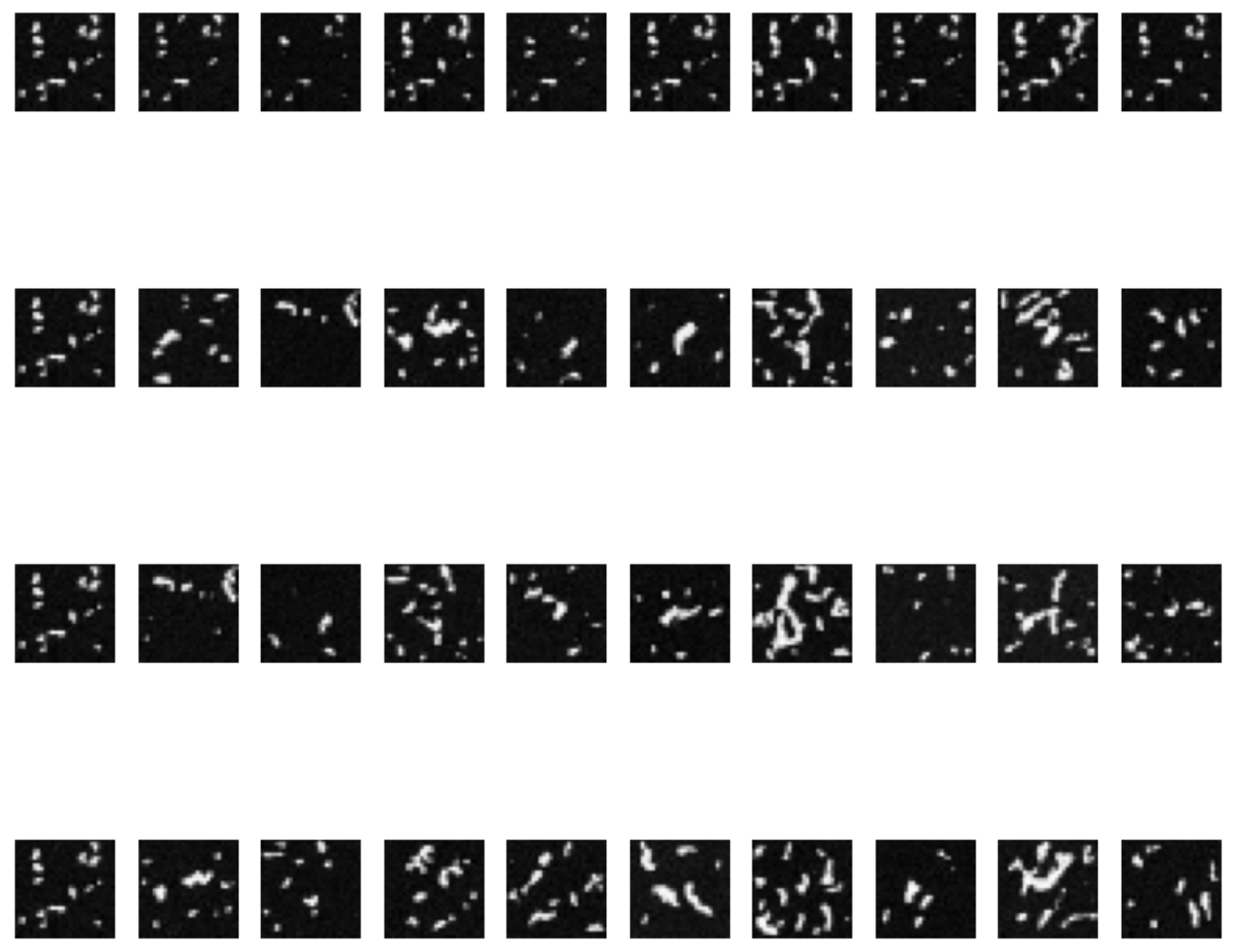
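One way to wire in the time conditioning is sketched below. The `FCBlock` layout (Linear, GELU, Linear), the channel count, the 300-step range, and the choice to add the embedding to the feature map are all assumptions for illustration; the project's layers may combine the signal differently.

```python
import torch
import torch.nn as nn

class FCBlock(nn.Module):
    """Small fully connected block mapping a conditioning vector to channels."""
    def __init__(self, in_dim, out_dim):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_dim, out_dim), nn.GELU(), nn.Linear(out_dim, out_dim)
        )

    def forward(self, v):
        # Reshape to (N, C, 1, 1) so it broadcasts over a feature map.
        return self.net(v)[:, :, None, None]

def condition_on_time(features, t_frac, fc_block):
    # Assumed combination: add the time embedding at every spatial position.
    return features + fc_block(t_frac)

fc_t = FCBlock(in_dim=1, out_dim=64)
features = torch.randn(4, 64, 7, 7)             # stand-in for the unflatten output
t_frac = torch.randint(0, 300, (4, 1)) / 300.0  # timestep as a fraction in [0, 1)
out = condition_on_time(features, t_frac, fc_t)
```

A second block of the same kind, with its output width matched to that layer's channel count, would play the same role at upblock1.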

Class conditioned

Additional fully connected block (FCB) layers were added to condition on class, and their outputs are multiplied into the same variables as the time conditioning. This results in images that actually correspond to the requested digits.

Below are the results at epoch 5

Below are the results at epoch 20
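The class conditioning can be sketched in the same spirit. The one-hot encoding, the layer sizes, and the exact combination (class output multiplies the features, time output is added) are assumptions for illustration rather than the project's confirmed wiring.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

num_classes, channels = 10, 64
# Stand-ins for the fully connected conditioning blocks.
fc_class = nn.Sequential(nn.Linear(num_classes, channels), nn.GELU(),
                         nn.Linear(channels, channels))
fc_time = nn.Sequential(nn.Linear(1, channels), nn.GELU(),
                        nn.Linear(channels, channels))

def condition(features, labels, t_frac):
    one_hot = F.one_hot(labels, num_classes).float()
    c = fc_class(one_hot)[:, :, None, None]  # class scale, shape (N, C, 1, 1)
    t = fc_time(t_frac)[:, :, None, None]    # time shift, shape (N, C, 1, 1)
    # Assumed combination: class multiplies the features, time is added.
    return c * features + t

features = torch.randn(4, channels, 7, 7)    # stand-in for an intermediate feature map
labels = torch.tensor([0, 3, 7, 9])          # requested digits
t_frac = torch.rand(4, 1)
out = condition(features, labels, t_frac)
```

Because the label enters the network at every denoising step, sampling with a fixed label steers the whole trajectory toward that digit.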